Environment

| Hostname | IP | Role | Components |
|---|---|---|---|
| master01 | 10.0.0.101 | master node | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, nfs-client |
| master02 | 10.0.0.102 | master node | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, nfs-client |
| master03 | 10.0.0.103 | master node | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, nfs-client |
| node01 | 10.0.0.110 | worker node | kubelet, kube-proxy, nfs-client |
| node02 | 10.0.0.111 | worker node | kubelet, kube-proxy, nfs-client |
| - | 10.0.0.180 | VIP | virtual IP that floats among the three masters |

Service CIDR: 10.96.0.0/12

Pod CIDR: 10.244.0.0/12
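10.244.0.0/12 is not aligned on a /12 boundary, so it actually denotes 10.240.0.0/12 (10.240.0.0 to 10.255.255.255). That range still does not overlap the Service CIDR 10.96.0.0/12 (10.96.0.0 to 10.111.255.255), which is what matters.

The sketch below does this check in plain bash. It also counts how many /24 per-node subnets kube-controller-manager can later hand out (it is configured with `--node-cidr-mask-size-ipv4=24`). The function names are illustrative, and only IPv4 is handled.

```shell
#!/usr/bin/env bash
# Sketch: check the Service/Pod CIDRs used in this guide (IPv4 only, pure bash).
set -euo pipefail

ip_to_int() {                       # "10.96.0.0" -> 32-bit integer
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

int_to_ip() {                       # 32-bit integer -> "a.b.c.d"
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

cidr_range() {                      # prints "first last" of a CIDR as integers
  local ip=${1%/*} len=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local first=$(( $(ip_to_int "$ip") & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

cidr_network() {                    # 10.244.0.0/12 -> 10.240.0.0/12
  local first last
  read -r first last < <(cidr_range "$1")
  echo "$(int_to_ip "$first")/${1#*/}"
}

cidrs_overlap() {                   # exit status 0 if the two ranges intersect
  local a1 a2 b1 b2
  read -r a1 a2 < <(cidr_range "$1")
  read -r b1 b2 < <(cidr_range "$2")
  (( a1 <= b2 && b1 <= a2 ))
}

SERVICE_CIDR=10.96.0.0/12
POD_CIDR=10.244.0.0/12
NODE_MASK=24                        # value of --node-cidr-mask-size-ipv4

echo "Service CIDR: $(cidr_network "$SERVICE_CIDR")"
echo "Pod CIDR:     $(cidr_network "$POD_CIDR")"
if cidrs_overlap "$SERVICE_CIDR" "$POD_CIDR"; then
  echo "ERROR: Service and Pod CIDRs overlap" >&2
else
  echo "OK: no overlap"
fi
echo "/${NODE_MASK} node subnets in the Pod CIDR: $(( 1 << (NODE_MASK - ${POD_CIDR#*/}) ))"
```

With the values above, the Pod CIDR yields 4096 node subnets of /24 each.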

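Basic environment setup

IP configuration

Note: if a host was cloned from another VM, regenerate the NIC's UUID. Otherwise the host cannot obtain its IPv6 address correctly.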
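This guide later switches to the classic `network` service. On such a CentOS 7 host, one way to regenerate the UUID is to replace the `UUID=` line in the interface's ifcfg file with a fresh value.

The sketch below makes a few assumptions:
- The interface is described by a file such as /etc/sysconfig/network-scripts/ifcfg-eth0. Both the path and the helper name `regen_nic_uuid` are illustrative.
- The new UUID comes from /proc/sys/kernel/random/uuid.

```shell
#!/usr/bin/env bash
# Sketch: write a fresh UUID into an ifcfg file (CentOS 7 network-scripts style).
# Assumption: the NIC is configured by a file like
# /etc/sysconfig/network-scripts/ifcfg-eth0; adjust the path to your NIC.
set -euo pipefail

regen_nic_uuid() {
  local cfg=$1 new_uuid
  new_uuid=$(cat /proc/sys/kernel/random/uuid)     # random UUID from the kernel
  if grep -q '^UUID=' "$cfg"; then
    sed -i "s/^UUID=.*/UUID=\"${new_uuid}\"/" "$cfg"
  else
    echo "UUID=\"${new_uuid}\"" >> "$cfg"
  fi
  echo "$new_uuid"
}

# Usage on the cloned host, then restart networking:
#   regen_nic_uuid /etc/sysconfig/network-scripts/ifcfg-eth0
#   systemctl restart network
```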

Required tools

yum update -y && yum -y install wget psmisc vim net-tools nfs-utils telnet yum-utils device-mapper-persistent-data lvm2 git tar curl

Disable the swap partition

sed -ri 's/.*swap.*/#&/' /etc/fstab

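swapoff -a && sysctl -w vm.swappiness=0

Disable NetworkManager

Alternatively, keep NetworkManager and add a rule that excludes interfaces whose names start with cali and tunl (the interfaces created by Calico), so that it does not manage them.

systemctl disable --now NetworkManager

systemctl start network && systemctl enable network

Time synchronization

Keep the clocks of all nodes synchronized; this step is routine and needs no further explanation.

Configure ulimit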

ulimit -SHn 65535
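For every item in limits.conf, the soft value must not exceed the hard value. `setrlimit()` rejects a soft limit that is above the hard limit, so it is worth checking the entries before appending them.

A minimal awk-based sketch follows. The helper name `check_limits` is hypothetical, and `unlimited`/`infinity` are treated as larger than any number.

```shell
#!/usr/bin/env bash
# Sketch: report limits.conf entries whose soft value exceeds the hard value
# for the same domain and item. "unlimited"/"infinity" count as unbounded.
set -euo pipefail

check_limits() {
  awk '
    /^[ \t]*#/ || NF < 4 { next }            # skip comments and short/blank lines
    {
      key = $1 SUBSEP $3                       # domain + item, e.g. "*" and "nofile"
      v = ($4 == "unlimited" || $4 == "infinity") ? -1 : $4 + 0
      if ($2 == "soft") soft[key] = v
      else if ($2 == "hard") hard[key] = v
    }
    END {
      bad = 0
      for (k in soft) {
        if (!(k in hard)) continue
        s = soft[k]; h = hard[k]
        # -1 stands for unlimited: it always exceeds a finite hard limit
        if (h != -1 && (s == -1 || s > h)) {
          split(k, p, SUBSEP)
          printf "%s %s: soft (%s) > hard (%s)\n", p[1], p[2], (s == -1 ? "unlimited" : s), h
          bad = 1
        }
      }
      exit bad
    }' "$1"
}

# Usage: check_limits /etc/security/limits.conf && echo "limits look consistent"
```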

cat >> /etc/security/limits.conf <<EOF
* soft nofile 655360
* hard nofile 655360
* soft nproc 655350
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
EOF

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent, takes effect after reboot

setenforce 0 # switch to permissive mode immediately (until reboot)

Upgrade the kernel

Add the ELRepo repository

yum install https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm -y

sed -i "s@mirrorlist@#mirrorlist@g" /etc/yum.repos.d/elrepo.repo

sed -i "s@elrepo.org/linux@mirrors.tuna.tsinghua.edu.cn/elrepo@g" /etc/yum.repos.d/elrepo.repo

List the available packages

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Upgrade to kernel-ml (ELRepo's mainline stable kernel)

yum -y --enablerepo=elrepo-kernel install kernel-ml

rpm -qa | grep kernel # list the installed kernels

grubby --default-kernel # show the default kernel

grubby --set-default $(ls /boot/vmlinuz-* | grep elrepo) # if the default is not the new kernel, set it explicitly

reboot

Install ipvsadm

yum install ipvsadm ipset sysstat conntrack libseccomp -y

cat >> /etc/modules-load.d/ipvs.conf <<EOF

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

systemctl restart systemd-modules-load.service
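`lsmod | grep` only shows what matched, so a module that failed to load is easy to overlook. The sketch below lists the entries of a modules-load.d file that are absent from saved `lsmod` output.

Two caveats:
- The helper name `missing_modules` is hypothetical.
- Some names in ipvs.conf are aliases (for example `ipt_set` is provided by `xt_set`). Such names may be reported even though the module behind them is loaded.

```shell
#!/usr/bin/env bash
# Sketch: print module names from a modules-load.d file that are missing from
# saved `lsmod` output. Alias names (e.g. ipt_set, provided by xt_set) can be
# reported even though the module behind them is loaded.
set -euo pipefail

missing_modules() {
  local conf=$1 lsmod_out=$2
  awk '
    NR == FNR { if (FNR > 1) loaded[$1] = 1; next }   # 1st file: lsmod, skip header
    $1 !~ /^[#;]/ && NF { if (!($1 in loaded)) print $1 }
  ' "$lsmod_out" "$conf"
}

# Usage on a node:
#   lsmod > /tmp/lsmod.txt
#   missing_modules /etc/modules-load.d/ipvs.conf /tmp/lsmod.txt
```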

lsmod | grep -e ip_vs -e nf_conntrack

Tune the kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536


net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

net.ipv6.conf.all.disable_ipv6 = 0

net.ipv6.conf.default.disable_ipv6 = 0

net.ipv6.conf.lo.disable_ipv6 = 0

net.ipv6.conf.all.forwarding = 1

EOF

sysctl --system
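If a key appears more than once in a sysctl.d file, the later assignment is the one that ends up applied, so accidental duplicates are easy to miss. The awk-based sketch below lists keys that are set more than once. `dup_sysctl_keys` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: list keys that are assigned more than once in a sysctl.d file.
set -euo pipefail

dup_sysctl_keys() {
  awk -F= '
    /^[ \t]*$/ || /^[ \t]*[#;]/ { next }       # skip blank lines and comments
    {
      key = $1
      gsub(/[ \t]/, "", key)                   # "net.core.somaxconn " -> "net.core.somaxconn"
      if (++seen[key] == 2) print key          # report each duplicated key once
    }' "$1"
}

# Usage: dup_sysctl_keys /etc/sysctl.d/k8s.conf
```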

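Parameter details

These Linux kernel parameters configure and tune networking, the file system, virtual memory and related features. Each parameter is explained below: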

net.ipv4.ip_forward = 1

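Enables IPv4 forwarding, allowing the server to forward packets between interfaces like a router.

net.bridge.bridge-nf-call-iptables = 1

Passes traffic that crosses a Linux bridge through iptables, so that iptables rules also apply to bridged packets. This key only exists once the br_netfilter module is loaded.

fs.may_detach_mounts = 1

Allows a mount point to be detached even while it is still in use in another mount namespace. This avoids "device or resource busy" errors when containers are removed (a RHEL/CentOS 7 kernel-specific setting).

vm.overcommit_memory=1

Always allows memory overcommit: the kernel grants allocation requests without checking whether enough memory is actually available.

vm.panic_on_oom=0

Does not panic (and reboot) when the system runs out of memory; instead, the OOM killer terminates a process.

fs.inotify.max_user_watches=89100

Maximum number of files and directories a single user can watch through inotify.

fs.file-max=52706963

System-wide maximum number of open file handles.

fs.nr_open=52706963

Maximum number of file descriptors a single process can open (the upper bound for the nofile limit).

net.netfilter.nf_conntrack_max=2310720

Maximum number of entries in the connection-tracking table.

net.ipv4.tcp_keepalive_time = 600

Idle time (in seconds) after which the kernel starts sending TCP keepalive probes on a connection that carries no data.

net.ipv4.tcp_keepalive_probes = 3

Number of unanswered keepalive probes after which the connection is considered dead.

net.ipv4.tcp_keepalive_intvl = 15

Interval (in seconds) between keepalive probes.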
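Together, the three keepalive settings determine roughly how long an idle connection to a vanished peer survives:
- The first probe is sent after tcp_keepalive_time seconds.
- Up to tcp_keepalive_probes probes are then sent, tcp_keepalive_intvl seconds apart.

A worked example in bash arithmetic, compared with the kernel defaults (7200, 75 and 9):

```shell
#!/usr/bin/env bash
# Worked example: approximate time (seconds) until an idle connection to a
# dead peer is dropped = tcp_keepalive_time + tcp_keepalive_probes * tcp_keepalive_intvl
keepalive_timeout() {
  local time=$1 intvl=$2 probes=$3
  echo $(( time + probes * intvl ))
}

keepalive_timeout 600 15 3    # values from k8s.conf -> prints 645
keepalive_timeout 7200 75 9   # kernel defaults      -> prints 7875
```

With the values in this guide, a dead peer is detected after about 645 seconds, instead of a little over two hours with the defaults.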

net.ipv4.tcp_max_tw_buckets = 36000

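Maximum number of sockets in the TIME_WAIT state that the system holds at the same time; beyond this limit, TIME_WAIT sockets are destroyed immediately.

net.ipv4.tcp_tw_reuse = 1

Allows sockets in the TIME_WAIT state to be reused for new outgoing connections when this is safe from the protocol's point of view. The safety check relies on TCP timestamps.

net.ipv4.tcp_max_orphans = 327680

Maximum number of TCP sockets that are no longer attached to any user file handle (orphaned sockets); beyond this limit, orphaned connections are reset immediately.

net.ipv4.tcp_orphan_retries = 3

Number of retries before the kernel gives up on a TCP connection that was already closed on the local side (an orphaned connection).

net.ipv4.tcp_syncookies = 1

Enables TCP SYN cookies, which protect against SYN flood attacks when the SYN queue overflows.

net.ipv4.tcp_max_syn_backlog = 16384

Maximum length of the SYN (half-open connection) queue, i.e. connection requests that have not yet completed the handshake.

net.ipv4.ip_conntrack_max = 65536

Legacy name of the connection-tracking table limit from older kernels. Current kernels normally do not provide this key; the effective limit is net.netfilter.nf_conntrack_max above.

net.ipv4.tcp_timestamps = 0

Disables TCP timestamps, intended here as a security measure. Because net.ipv4.tcp_tw_reuse relies on timestamps, this also prevents tcp_tw_reuse from having any effect.

net.core.somaxconn = 16384

Upper limit for the accept queue (the backlog argument of listen()) of each listening socket.

net.ipv6.conf.all.disable_ipv6 = 0

Enables IPv6 on all interfaces.

net.ipv6.conf.default.disable_ipv6 = 0

Enables IPv6 by default on newly created interfaces.

net.ipv6.conf.lo.disable_ipv6 = 0

Enables IPv6 on the loopback interface.

net.ipv6.conf.all.forwarding = 1

Enables IPv6 packet forwarding.

Configure /etc/hosts on all nodes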

cat >> /etc/hosts << EOF

10.0.0.101 k8s-master01

10.0.0.102 k8s-master02

10.0.0.103 k8s-master03

10.0.0.110 node01

10.0.0.111 node02

EOF

Install the base components

This time Docker is not used as the container runtime; containerd is used instead.

Install containerd

https://github.com/containernetworking/plugins/releases/

https://github.com/containernetworking/plugins/releases/download/v1.4.1/cni-plugins-linux-amd64-v1.4.1.tgz

# create the directories needed by the CNI plugins

mkdir -p /etc/cni/net.d /opt/cni/bin

# extract the CNI plugin binaries

tar xf cni-plugins-linux-amd64-v*.tgz -C /opt/cni/bin/

https://github.com/containerd/containerd/releases/

https://github.com/containerd/containerd/releases/download/v1.7.15/cri-containerd-1.7.15-linux-amd64.tar.gz

tar -xzf cri-containerd-*-linux-amd64.tar.gz -C /

# create the systemd service file

cat > /etc/systemd/system/containerd.service <<EOF

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/local/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=infinity

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

EOF

Configure the kernel modules required by containerd

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

Load the modules

systemctl restart systemd-modules-load.service

Configure the kernel parameters required by containerd

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# apply the kernel parameters

sysctl --system

Create the containerd configuration file

# generate the default configuration file

mkdir -p /etc/containerd

containerd config default | tee /etc/containerd/config.toml

# modify the containerd configuration file

sed -i "s#SystemdCgroup\ \=\ false#SystemdCgroup\ \=\ true#g" /etc/containerd/config.toml

cat /etc/containerd/config.toml | grep SystemdCgroup

sed -i "s#registry.k8s.io#m.daocloud.io/registry.k8s.io#g" /etc/containerd/config.toml

cat /etc/containerd/config.toml | grep sandbox_image

sed -i "s#config_path\ \=\ \"\"#config_path\ \=\ \"/etc/containerd/certs.d\"#g" /etc/containerd/config.toml

cat /etc/containerd/config.toml | grep certs.d

# configure registry mirrors

mkdir /etc/containerd/certs.d/docker.io -pv

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://docker.io"


[host."https://reg-mirror.qiniu.com"]

capabilities = ["pull", "resolve"]

[host."https://registry.docker-cn.com"]

capabilities = ["pull", "resolve"]

[host."http://hub-mirror.c.163.com"]

capabilities = ["pull", "resolve"]

EOF

Start containerd and enable it at boot

systemctl daemon-reload

systemctl enable --now containerd.service

systemctl stop containerd.service

systemctl start containerd.service

systemctl restart containerd.service

systemctl status containerd.service

Configure the runtime endpoint that the crictl client connects to

https://github.com/kubernetes-sigs/cri-tools/releases/

https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.29.0/crictl-v1.29.0-linux-amd64.tar.gz

# extract

tar xf crictl-v*-linux-amd64.tar.gz -C /usr/bin/

# generate the configuration file

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

# test

systemctl restart containerd

crictl info

Prepare the certificate tools

wget "https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssl_1.6.4_linux_amd64" -O /usr/local/bin/cfssl

wget "https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssljson_1.6.4_linux_amd64" -O /usr/local/bin/cfssljson

[root@master01 opt]# ll /usr/local/bin/cfssl*

-rw-r--r-- 1 root root 12054528 Apr 11 2023 /usr/local/bin/cfssl

-rw-r--r-- 1 root root 7643136 Apr 11 2023 /usr/local/bin/cfssljson

# make them executable

chmod +x /usr/local/bin/cfssl*

Install etcd

https://github.com/etcd-io/etcd/releases/download/v3.5.13/etcd-v3.5.13-linux-amd64.tar.gz

tar xf etcd-v3.5.13-linux-amd64.tar.gz

mv etcd-v3.5.13-linux-amd64/etcd /usr/local/bin/

mv etcd-v3.5.13-linux-amd64/etcdctl /usr/local/bin/

[root@master01 opt]# etcdctl version

etcdctl version: 3.5.13

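API version: 3.5

Prepare the etcd certificates

mkdir -p /opt/ssl/etcd && cd /opt/ssl/etcd

Write the configuration files needed to generate the certificates

Self-signed CA: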

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

cat > etcd-ca-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

# generate the CA certificate and key

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare etcd-ca

Issue the etcd certificate with the self-signed CA

Create the certificate signing request (CSR) file:

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"10.0.0.101",

"10.0.0.102",

"10.0.0.103"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

cfssl gencert \
  -ca=etcd-ca.pem \
  -ca-key=etcd-ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  etcd-csr.json | cfssljson -bare etcd

Copy the etcd certificates to the other two nodes

for NODE in k8s-master02 k8s-master03; do scp -r etcd ${NODE}:/opt/ssl/pki/; done

Create the working directories and extract the archive

mkdir /opt/etcd/{bin,cfg,ssl} -p

tar xvf etcd-v3.5.13-linux-amd64.tar.gz

mv etcd-v3.5.13-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

# copy the certificate files

cp ssl/pki/etcd/{etcd.pem,etcd-key.pem,etcd-ca.pem} /opt/etcd/ssl/

Create the etcd configuration file

cat > /opt/etcd/cfg/etcd.config.yml << EOF
name: 'k8s-master01'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://10.0.0.101:2380'
listen-client-urls: 'https://10.0.0.101:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://10.0.0.101:2380'
advertise-client-urls: 'https://10.0.0.101:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://10.0.0.101:2380,k8s-master02=https://10.0.0.102:2380,k8s-master03=https://10.0.0.103:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/opt/etcd/ssl/etcd.pem'
  key-file: '/opt/etcd/ssl/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/opt/etcd/ssl/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/opt/etcd/ssl/etcd.pem'
  key-file: '/opt/etcd/ssl/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/opt/etcd/ssl/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF

Create the systemd service file

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/opt/etcd/bin/etcd --config-file=/opt/etcd/cfg/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

EOF

systemctl daemon-reload

systemctl start etcd

systemctl enable etcd

Copy the files to the other nodes

for NODE in k8s-master02 k8s-master03; do scp -r etcd ${NODE}:/opt/;scp /usr/lib/systemd/system/etcd.service root@${NODE}:/usr/lib/systemd/system/; done

Then, on nodes 2 and 3, change the node name and the local server IP in etcd.config.yml:

name: 'k8s-master02' # change to the current node's name

...

listen-peer-urls: 'https://10.0.0.102:2380' # change to the current node's IP

listen-client-urls: 'https://10.0.0.102:2379,http://127.0.0.1:2379' # change to the current node's IP

...

initial-advertise-peer-urls: 'https://10.0.0.102:2380' # change to the current node's IP

advertise-client-urls: 'https://10.0.0.102:2379' # change to the current node's IP

...

Finally, start etcd and enable it at boot, the same way as on k8s-master01.
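Instead of editing the name and the four URLs by hand, they can be rewritten with sed. The substitutions are anchored to the individual keys, so initial-cluster (which must be identical on all members) is left alone. This is a sketch; `make_member_config` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: derive another member's etcd config from k8s-master01's file by
# rewriting only the member name and the node-specific URLs.
set -euo pipefail

make_member_config() {
  local src=$1 name=$2 ip=$3
  sed -e "s|^name: .*|name: '${name}'|" \
      -e "s|^listen-peer-urls: .*|listen-peer-urls: 'https://${ip}:2380'|" \
      -e "s|^listen-client-urls: .*|listen-client-urls: 'https://${ip}:2379,http://127.0.0.1:2379'|" \
      -e "s|^initial-advertise-peer-urls: .*|initial-advertise-peer-urls: 'https://${ip}:2380'|" \
      -e "s|^advertise-client-urls: .*|advertise-client-urls: 'https://${ip}:2379'|" \
      "$src"
}

# Usage on k8s-master02, after the files were copied from k8s-master01:
#   make_member_config /opt/etcd/cfg/etcd.config.yml k8s-master02 10.0.0.102 > /tmp/etcd.yml
#   mv /tmp/etcd.yml /opt/etcd/cfg/etcd.config.yml
```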

Check the etcd status

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/etcd-ca.pem --cert=/opt/etcd/ssl/etcd.pem --key=/opt/etcd/ssl/etcd-key.pem --endpoints="https://10.0.0.101:2379,https://10.0.0.102:2379,https://10.0.0.103:2379" endpoint health

https://10.0.0.102:2379 is healthy: successfully committed proposal: took = 33.899098ms

https://10.0.0.103:2379 is healthy: successfully committed proposal: took = 36.90578ms

https://10.0.0.101:2379 is healthy: successfully committed proposal: took = 38.906168ms

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/etcd-ca.pem --cert=/opt/etcd/ssl/etcd.pem --key=/opt/etcd/ssl/etcd-key.pem --endpoints="https://10.0.0.101:2379,https://10.0.0.102:2379,https://10.0.0.103:2379" endpoint health --write-out=table

+-------------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+-------------------------+--------+-------------+-------+

| https://10.0.0.101:2379 | true | 21.601571ms | |

| https://10.0.0.102:2379 | true | 28.358331ms | |

| https://10.0.0.103:2379 | true | 31.062415ms | |

+-------------------------+--------+-------------+-------+

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --endpoints="10.0.0.101:2379,10.0.0.102:2379,10.0.0.103:2379" --cacert=/opt/etcd/ssl/etcd-ca.pem --cert=/opt/etcd/ssl/etcd.pem --key=/opt/etcd/ssl/etcd-key.pem endpoint status --write-out=table

+-----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+-----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 10.0.0.101:2379 | 1846d697f0b7e7ce | 3.5.13 | 20 kB | false | false | 2 | 17 | 17 | |

| 10.0.0.102:2379 | e03490914703e5f4 | 3.5.13 | 20 kB | true | false | 2 | 17 | 17 | |

| 10.0.0.103:2379 | b04c42a5dd42d6bb | 3.5.13 | 29 kB | false | false | 2 | 17 | 17 | |

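+-----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

Install the high-availability components

Install these two packages on all three masters; the VIP floats among the three masters.

yum -y install keepalived haproxy

# back up the original configuration files

mv /etc/haproxy/haproxy.cfg{,.bak}

mv /etc/keepalived/keepalived.conf{,.bak}

Configuration files

The HAProxy configuration is identical on all three masters.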

cat >/etc/haproxy/haproxy.cfg<<"EOF"

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:9443

bind 127.0.0.1:9443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 10.0.0.101:6443 check

server k8s-master02 10.0.0.102:6443 check

server k8s-master03 10.0.0.103:6443 check

EOF

Keepalived configuration file (k8s-master01)

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

# make sure this matches the actual NIC name

interface eth0

mcast_src_ip 10.0.0.101

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.180

}

track_script {

chk_apiserver

} }

EOF

k8s-master02 configuration file

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

# make sure this matches the actual NIC name

interface eth0

mcast_src_ip 10.0.0.102

virtual_router_id 51

priority 80

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.180

}

track_script {

chk_apiserver

} }

EOF

k8s-master03 configuration file

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

# make sure this matches the actual NIC name

interface eth0

mcast_src_ip 10.0.0.103

virtual_router_id 51

priority 50

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.180

}

track_script {

chk_apiserver

} }

EOF

Keepalived health-check script

cat > /etc/keepalived/check_apiserver.sh << EOF

#!/bin/bash

err=0

for k in \$(seq 1 3)

do

check_code=\$(pgrep haproxy)

if [[ \$check_code == "" ]]; then

err=\$(expr \$err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ \$err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/check_apiserver.sh

Start the services

systemctl enable haproxy

systemctl enable keepalived

systemctl start haproxy

systemctl start keepalived

At this point one of the masters should hold the VIP; the apiserver is reachable through it on port 9443.
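To see which master currently holds the VIP, look for 10.0.0.180 in the output of `ip -4 -o addr show`. The sketch below reads that output on stdin and ignores the prefix length. `holds_vip` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the given VIP appears as an "inet" address in
# `ip -4 -o addr show` output read from stdin (prefix length is ignored).
set -euo pipefail

holds_vip() {
  local vip=$1
  awk -v vip="$vip" '
    {
      for (i = 1; i < NF; i++)
        if ($i == "inet") {
          split($(i + 1), a, "/")              # "10.0.0.180/32" -> "10.0.0.180"
          if (a[1] == vip) found = 1
        }
    }
    END { exit !found }'
}

# Usage on a master:
#   ip -4 -o addr show | holds_vip 10.0.0.180 && echo "this node holds the VIP"
```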

Install kube-apiserver

https://dl.k8s.io/v1.30.0/kubernetes-server-linux-amd64.tar.gz

Extract the binaries

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

[root@master01 opt]# tar -xvf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /opt/kubernetes/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

kubernetes/server/bin/kube-scheduler

kubernetes/server/bin/kubelet

kubernetes/server/bin/kube-controller-manager

kubernetes/server/bin/kube-proxy

kubernetes/server/bin/kubectl

kubernetes/server/bin/kube-apiserver

[root@master01 opt]# ls /opt/kubernetes/bin/

kube-apiserver kubectl kube-proxy

kube-controller-manager kubelet kube-scheduler

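# move kubelet and kubectl to /usr/bin

mv /opt/kubernetes/bin/kubelet /usr/bin/

mv /opt/kubernetes/bin/kubectl /usr/bin/

Prepare the Kubernetes certificates

Self-signed certificate authority (CA)

Write the configuration file needed to generate the certificate. The Kubernetes certificates are generated in a directory next to the etcd one (for example /opt/ssl/k8s), because later commands reference ../etcd/ca-config.json.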

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

Generate the certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

[root@master01 k8s]# ll

total 16

-rw-r--r-- 1 root root 1074 Apr 18 20:29 ca.csr

-rw-r--r-- 1 root root 267 Apr 18 20:28 ca-csr.json

-rw------- 1 root root 1679 Apr 18 20:29 ca-key.pem

-rw-r--r-- 1 root root 1367 Apr 18 20:29 ca.pem

Issue the kube-apiserver HTTPS certificate with the self-signed CA

cat > apiserver-csr.json << EOF

{

"CN": "kube-apiserver",

"hosts":[

"10.96.0.1",

"10.0.0.180",

"127.0.0.1",

"10.0.0.101",

"10.0.0.102",

"10.0.0.103",

"10.0.0.110",

"10.0.0.111",

"10.0.0.112",

"10.0.0.113",

"10.0.0.114",

"10.0.0.115",

"10.0.0.116",

"10.0.0.117",

"10.0.0.118",

"10.0.0.119",

"10.0.0.120",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

]

}

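EOF

Note: the hosts field above must list every Master, load-balancer and VIP IP; none may be missing. To make later scale-out easier, a few spare IPs can be added in advance. The list also contains 10.96.0.1, the first address of the Service CIDR, which is assigned to the kubernetes Service.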
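The apiserver assigns the first address of --service-cluster-ip-range to the `kubernetes` Service, so that address has to be among the hosts listed above. The sketch below derives it from the Service CIDR in plain bash. `first_service_ip` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: first usable address of an IPv4 Service CIDR, i.e. the ClusterIP that
# the apiserver gives to the default/kubernetes Service.
set -euo pipefail

first_service_ip() {
  local ip=${1%/*} len=${1#*/}
  local IFS=.
  local -a o=($ip)
  local n=$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))                      # network address + 1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

first_service_ip 10.96.0.0/12   # prints 10.96.0.1
```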

Generate the certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=../etcd/ca-config.json \
  -profile=kubernetes apiserver-csr.json | cfssljson -bare apiserver

Create the ServiceAccount key pair (secret)

openssl genrsa -out sa.key 2048

openssl rsa -in sa.key -pubout -out sa.pub

Aggregation layer (front-proxy) certificates

cat > front-proxy-ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"ca": {

"expiry": "876000h"

}

}

EOF

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare front-proxy-ca

cat > front-proxy-client-csr.json << EOF

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

cfssl gencert \
  -ca=front-proxy-ca.pem \
  -ca-key=front-proxy-ca-key.pem \
  -config=../etcd/ca-config.json \
  -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare front-proxy-client

Copy the certificate files

cp ca.pem apiserver*pem front-proxy-ca.pem front-proxy-client*pem ca-key.pem /opt/kubernetes/ssl/

cp sa.* /opt/kubernetes/ssl/

Prepare the Kubernetes directories

mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

Prepare the service file

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-apiserver \\
      --v=2 \\
      --allow-privileged=true \\
      --bind-address=10.0.0.101 \\
      --secure-port=6443 \\
      --advertise-address=10.0.0.101 \\
      --service-cluster-ip-range=10.96.0.0/12 \\
      --service-node-port-range=30000-32767 \\
      --etcd-servers=https://10.0.0.101:2379,https://10.0.0.102:2379,https://10.0.0.103:2379 \\
      --etcd-cafile=/opt/etcd/ssl/etcd-ca.pem \\
      --etcd-certfile=/opt/etcd/ssl/etcd.pem \\
      --etcd-keyfile=/opt/etcd/ssl/etcd-key.pem \\
      --client-ca-file=/opt/kubernetes/ssl/ca.pem \\
      --tls-cert-file=/opt/kubernetes/ssl/apiserver.pem \\
      --tls-private-key-file=/opt/kubernetes/ssl/apiserver-key.pem \\
      --kubelet-client-certificate=/opt/kubernetes/ssl/apiserver.pem \\
      --kubelet-client-key=/opt/kubernetes/ssl/apiserver-key.pem \\
      --service-account-key-file=/opt/kubernetes/ssl/sa.pub \\
      --service-account-signing-key-file=/opt/kubernetes/ssl/sa.key \\
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \\
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction,DefaultStorageClass,DefaultTolerationSeconds \\
      --authorization-mode=RBAC,Node \\
      --enable-bootstrap-token-auth=true \\
      --requestheader-client-ca-file=/opt/kubernetes/ssl/front-proxy-ca.pem \\
      --proxy-client-cert-file=/opt/kubernetes/ssl/front-proxy-client.pem \\
      --proxy-client-key-file=/opt/kubernetes/ssl/front-proxy-client-key.pem \\
      --requestheader-allowed-names=front-proxy-client \\
      --requestheader-group-headers=X-Remote-Group \\
      --requestheader-extra-headers-prefix=X-Remote-Extra- \\
      --requestheader-username-headers=X-Remote-User \\
      --enable-aggregator-routing=true
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver

[root@master01 k8s]# systemctl status kube-apiserver.service

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2024-04-18 22:37:20 CST; 3min 48s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 6683 (kube-apiserver)

Tasks: 8

Memory: 188.1M

CGroup: /system.slice/kube-apiserver.service

└─6683 /opt/kubernetes/bin/kube-apiserver --v=2 --allow-privile...

Apr 18 22:37:23 master01 kube-apiserver[6683]: I0418 22:37:23.361225 66...

Apr 18 22:37:23 master01 kube-apiserver[6683]: [-]poststarthook/rbac/boots...

Apr 18 22:37:23 master01 kube-apiserver[6683]: I0418 22:37:23.461611 66...

Apr 18 22:37:23 master01 kube-apiserver[6683]: [-]poststarthook/rbac/boots...

Apr 18 22:37:23 master01 kube-apiserver[6683]: I0418 22:37:23.561377 66...

Apr 18 22:37:23 master01 kube-apiserver[6683]: [-]poststarthook/rbac/boots...

Apr 18 22:37:23 master01 kube-apiserver[6683]: W0418 22:37:23.672368 66...

Apr 18 22:37:23 master01 kube-apiserver[6683]: I0418 22:37:23.673794 66...

Apr 18 22:37:23 master01 kube-apiserver[6683]: I0418 22:37:23.682482 66...

Apr 18 22:40:42 master01 kube-apiserver[6683]: I0418 22:40:42.261559 66...

Hint: Some lines were ellipsized, use -l to show in full.

Deploy kubectl

Generate the certificate

Create the CSR file

cat > admin-csr.json << EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}

EOF

Generate the certificate

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=../etcd/ca-config.json \
  -profile=kubernetes \
  admin-csr.json | cfssljson -bare admin

Create the kubeconfig file

The kubeconfig is kubectl's configuration file. It contains everything needed to access the apiserver, such as the apiserver address, the CA certificate and the client's own certificate.

Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.180:9443 \
  --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

Set the credentials

kubectl config set-credentials kubernetes-admin \
  --client-certificate=admin.pem \
  --client-key=admin-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

Set the context

kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

Set the default context

kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

TLS bootstrapping configuration

Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true --server=https://10.0.0.180:9443 \
  --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Set the credentials

kubectl config set-credentials tls-bootstrap-token-user \
  --token=8vhqpf.pe6vl8kffc32acfu \
  --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Set the context

kubectl config set-context tls-bootstrap-token-user@kubernetes \
  --cluster=kubernetes \
  --user=tls-bootstrap-token-user \
  --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Set the default context

kubectl config use-context tls-bootstrap-token-user@kubernetes \
  --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Copy the admin kubeconfig to the root user's environment

mkdir -p /root/.kube; cp /opt/kubernetes/cfg/admin.kubeconfig /root/.kube/config

Then apply the bootstrap token Secret:

kubectl apply -f bootstrap.secret.yaml
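A bootstrap token has the form `<token-id>.<token-secret>`: a 6-character ID and a 16-character secret, both drawn from [a-z0-9]. Two constraints apply to the Secret that carries it:
- It must be named `bootstrap-token-<token-id>` in the kube-system namespace.
- Its token-id/token-secret must match the value passed to `--token` above (8vhqpf.pe6vl8kffc32acfu in this guide).

For a new cluster you may prefer a random token over reusing that one. The sketch below generates one; `rand_lower_alnum` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: generate a bootstrap token ([a-z0-9]{6}.[a-z0-9]{16}) and print the
# name of the Secret that has to carry it.
set -euo pipefail

rand_lower_alnum() {                  # $1 = number of characters
  local out=""
  while [ "${#out}" -lt "$1" ]; do
    out+=$(head -c 64 /dev/urandom | LC_ALL=C tr -dc 'a-z0-9')
  done
  echo "${out:0:$1}"
}

TOKEN_ID=$(rand_lower_alnum 6)
TOKEN_SECRET=$(rand_lower_alnum 16)
echo "token:  ${TOKEN_ID}.${TOKEN_SECRET}"
echo "secret: kube-system/bootstrap-token-${TOKEN_ID}"
```

If you generate a new token, use the same value in `kubectl config set-credentials ... --token=` and in bootstrap.secret.yaml.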

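kubectl can now be used to operate the cluster.

In my lab environment the controller manager and the scheduler had already been installed at this point, which is why they appear in the output below; this does not affect the steps.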

[root@master01 k8s]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy ok

Install kube-controller-manager

Generate the kube-controller-manager certificate

cat > manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "system:kube-controller-manager",

"OU": "Kubernetes-manual"

}

]

}

EOF

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=../etcd/ca-config.json \
  -profile=kubernetes \
  manager-csr.json | cfssljson -bare controller-manager

Create the kubeconfig for kube-controller-manager

Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.180:9443 \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig

Set the client credentials

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=controller-manager.pem \
  --client-key=controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig

Set the context

kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig

Set the default context

kubectl config use-context system:kube-controller-manager@kubernetes \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig

Configure the kube-controller-manager service

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-controller-manager \\
      --v=2 \\
      --bind-address=0.0.0.0 \\
      --root-ca-file=/opt/kubernetes/ssl/ca.pem \\
      --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
      --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
      --service-account-private-key-file=/opt/kubernetes/ssl/sa.key \\
      --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig \\
      --leader-elect=true \\
      --use-service-account-credentials=true \\
      --node-monitor-grace-period=40s \\
      --node-monitor-period=5s \\
      --controllers=*,bootstrapsigner,tokencleaner \\
      --allocate-node-cidrs=true \\
      --service-cluster-ip-range=10.96.0.0/12 \\
      --cluster-cidr=10.244.0.0/12 \\
      --node-cidr-mask-size-ipv4=24 \\
      --requestheader-client-ca-file=/opt/kubernetes/ssl/front-proxy-ca.pem \\
      --cluster-name=kubernetes \\
      --horizontal-pod-autoscaler-sync-period=10s
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF

Deploy kube-scheduler

Generate the kube-scheduler certificate

cat > scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

EOF

Generate the certificate

cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=../etcd/ca-config.json \
  -profile=kubernetes \
  scheduler-csr.json | cfssljson -bare scheduler

Create the kubeconfig for kube-scheduler

Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.180:9443 \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

Set the credentials

kubectl config set-credentials system:kube-scheduler \
  --client-certificate=scheduler.pem \
  --client-key=scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

Set the context

kubectl config set-context system:kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

Set the default context

kubectl config use-context system:kube-scheduler@kubernetes \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

Configure the kube-scheduler service

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-scheduler \\
      --v=2 \\
      --bind-address=0.0.0.0 \\
      --leader-elect=true \\
      --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF

Deploy kube-proxy

Create the CSR file

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "system:kube-proxy",

"OU": "Kubernetes-manual"

}

]

}

EOF生成证书

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=../etcd/ca-config.json \

-profile=kubernetes \

kube-proxy-csr.json | cfssljson -bare kube-proxy设置集群参数

```shell
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.180:9443 \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
```

Set the credential parameters

```shell
kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/ssl/kube-proxy.pem \
  --client-key=/opt/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
```

Set the context parameters

```shell
kubectl config set-context kube-proxy@kubernetes \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
```

Set the default context

```shell
kubectl config use-context kube-proxy@kubernetes --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
```

Create the service file

```shell
cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-proxy \\
  --config=/opt/kubernetes/cfg/kube-proxy.yaml \\
  --cluster-cidr=10.244.0.0/12 \\
  --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF
```

Create the kube-proxy.yaml configuration file

```shell
cat > /opt/kubernetes/cfg/kube-proxy.yaml << EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 10.244.0.0/12
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
  strictARP: true
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
EOF
```

Enable at boot

```shell
systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
systemctl status kube-proxy
```

Deploy kubelet

```shell
mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/
```

Create the service file

```shell
cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=network-online.target firewalld.service containerd.service
Wants=network-online.target
Requires=containerd.service

[Service]
ExecStart=/usr/bin/kubelet \\
  --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig \\
  --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
  --config=/opt/kubernetes/cfg/kubelet-conf.yml \\
  --container-runtime-endpoint=unix:///run/containerd/containerd.sock \\
  --node-labels=node.kubernetes.io/node=

[Install]
WantedBy=multi-user.target
EOF
```

Create the kubelet configuration file on all nodes
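The configuration below sets `cgroupDriver: systemd`, and the container runtime has to use the same cgroup driver. For containerd's runc runtime, that means `SystemdCgroup = true` in its config file, commonly /etc/containerd/config.toml. containerd's configuration is not part of this section, so that path is an assumption. A mismatch between the two settings is a common source of kubelet and Pod startup problems.

Below is a minimal sketch that compares the two settings. `check_cgroup_driver` is a hypothetical helper. It only understands the flat `key: value` / `key = value` lines used here and is not a YAML or TOML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check_cgroup_driver KUBELET_YAML CONTAINERD_TOML
# Succeeds when kubelet's cgroupDriver and containerd's SystemdCgroup agree.
check_cgroup_driver() {
  local kubelet_cfg=$1 containerd_cfg=$2 driver systemd_cgroup
  driver=$(awk -F': *' '$1 == "cgroupDriver" {print $2; exit}' "$kubelet_cfg")
  driver=${driver:-cgroupfs}                # KubeletConfiguration default when unset
  systemd_cgroup=$(awk -F' *= *' '$1 ~ /^[ \t]*SystemdCgroup$/ {print $2; exit}' "$containerd_cfg")
  systemd_cgroup=${systemd_cgroup:-false}   # containerd's runc default when unset
  if [[ $driver == systemd && $systemd_cgroup == true ]]; then return 0; fi
  if [[ $driver == cgroupfs && $systemd_cgroup == false ]]; then return 0; fi
  echo "mismatch: kubelet cgroupDriver=$driver, containerd SystemdCgroup=$systemd_cgroup" >&2
  return 1
}
```

Usage, once both files exist: `check_cgroup_driver /opt/kubernetes/cfg/kubelet-conf.yml /etc/containerd/config.toml && echo "cgroup drivers match"`.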

```shell
cat > /opt/kubernetes/cfg/kubelet-conf.yml <<EOF
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s
EOF
```

Enable at boot

```shell
systemctl daemon-reload
systemctl enable kubelet
systemctl start kubelet
systemctl status kubelet
```

Install the network plugin

Prerequisites

CentOS 7 needs a newer libseccomp installed.
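CentOS 7 ships libseccomp 2.3.x, while the package installed below has to be version 2.4 or later. A version comparison with GNU `sort -V` shows whether the installed version is already new enough. `version_ge` is a hypothetical helper, and reading the version through `rpm -q --qf` is just one way to feed it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: version_ge HAVE NEED
# Succeeds when version HAVE >= NEED. sort -V (GNU coreutils) orders version
# strings component by component, so HAVE is new enough exactly when NEED
# sorts first (or the two are equal).
version_ge() {
  [[ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" == "$2" ]]
}
```

Usage: `version_ge "$(rpm -q --qf '%{VERSION}' libseccomp)" 2.4 && echo "libseccomp is new enough"`.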

Upgrade runc

```shell
# https://github.com/opencontainers/runc/releases
wget https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64
install -m 755 runc.amd64 /usr/local/sbin/runc
cp -p /usr/local/sbin/runc /usr/local/bin/runc
cp -p /usr/local/sbin/runc /usr/bin/runc
```

Download a libseccomp package of version 2.4 or later

```shell
yum -y install http://rpmfind.net/linux/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm
# Or from the Tsinghua mirror
yum -y install https://mirrors.tuna.tsinghua.edu.cn/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm
```

Check the installed version

```shell
rpm -qa | grep libseccomp
```

Install calico
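The Calico pool set below (`CALICO_IPV4POOL_CIDR`, 172.16.0.0/12) is not the same range as kube-proxy's clusterCIDR earlier in this section (10.244.0.0/12). Whichever range ends up carrying Pod traffic must not overlap the Service CIDR 10.96.0.0/12 or the node network.

The bash sketch below tests two IPv4 CIDRs for overlap by comparing their integer ranges. The helper names are illustrative. Host bits are masked off, so 10.244.0.0/12 actually denotes 10.240.0.0 to 10.255.255.255.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for IPv4 CIDR arithmetic (bash 64-bit integers).

# ip2int A.B.C.D: print the address as a 32-bit integer.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# int2ip N: print a 32-bit integer in dotted-quad form.
int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# cidr_range A.B.C.D/LEN: print "first last" of the block as integers.
cidr_range() {
  local ip=${1%/*} len=${1#*/} mask base
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  base=$(( $(ip2int "$ip") & mask ))
  echo "$base $(( base | (~mask & 0xFFFFFFFF) ))"
}

# cidrs_overlap CIDR1 CIDR2: succeed if the two blocks share any address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}
```

For instance, `cidrs_overlap 10.96.0.0/12 172.16.0.0/12 || echo "no overlap"` prints `no overlap` for the Service CIDR and the Calico pool used here.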

Modify the configuration

```shell
vim calico.yaml
```

```
# In the calico-config ConfigMap
"ipam": {
    "type": "calico-ipam",
},

# In the env section of the calico-node container
- name: IP
  value: "autodetect"
- name: CALICO_IPV4POOL_CIDR
  value: "172.16.0.0/12"
```

```shell
sed -i "s#docker.io/calico/#m.daocloud.io/docker.io/calico/#g" calico.yaml
kubectl apply -f calico.yaml
# Calico initializes slowly; allow about ten minutes
kubectl get pod -A
```

Deploy CoreDNS

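https://github.com/coredns/deployment/blob/master/kubernetes/coredns.yaml.sed

Save the template as coredns.yaml and adjust it as follows:

- Set the `kubernetes` plugin line to `kubernetes cluster.local in-addr.arpa ip6.arpa`
- Set the `forward` line to `forward . /etc/resolv.conf`
- Set clusterIP to 10.96.0.10 (the clusterDNS value in the kubelet configuration file)
- Remove `STUBDOMAINS` (around line 73)
- Images can be pulled through the mirror m.daocloud.io/docker.io/coredns/

```shell
kubectl apply -f coredns.yaml
```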
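The clusterIP given to CoreDNS has to fall inside the Service CIDR (10.96.0.0/12) and match kubelet's clusterDNS. The API server takes the first address of that range for the `kubernetes` Service, which is why the test below resolves kubernetes.default to 10.96.0.1.

The bash sketch below computes the Nth address of a CIDR, which is handy for double-checking such values. `nth_ip` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; ip2int/int2ip convert between dotted quads and integers.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# nth_ip CIDR N: print the address N positions after the network address,
# failing if that offset lies outside the block.
nth_ip() {
  local ip=${1%/*} len=${1#*/} n=$2 mask base size
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  base=$(( $(ip2int "$ip") & mask ))
  size=$(( 1 << (32 - len) ))
  if (( n < 0 || n >= size )); then
    echo "offset $n is outside $1" >&2
    return 1
  fi
  int2ip "$(( base + n ))"
}
```

`nth_ip 10.96.0.0/12 10` prints 10.96.0.10, the clusterDNS address used in this guide, and `nth_ip 10.96.0.0/12 1` prints 10.96.0.1.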

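Test whether DNS works:

```shell
kubectl run -i --tty --rm debug --image=busybox --restart=Never -- /bin/sh
# Inside the debug pod:
nslookup kubernetes
```

```
Server: 10.96.0.10
Address: 10.96.0.10:53

** server can't find kubernetes.cluster.local: NXDOMAIN

Name: kubernetes.default.svc.cluster.local
Address: 10.96.0.1

** server can't find kubernetes.cluster.local: NXDOMAIN
** server can't find kubernetes.svc.cluster.local: NXDOMAIN
** server can't find kubernetes.svc.cluster.local: NXDOMAIN
```

The NXDOMAIN lines come from the other search domains and are expected. The `Name`/`Address` pair shows that kubernetes.default.svc.cluster.local resolves to 10.96.0.1.

Deploy master02 and master03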

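Prepare the environment

Run on master02 and master03:

```shell
mkdir /opt/kubernetes/{bin,cfg,logs,ssl} -p
mkdir ~/.kube
```

Upgrade runc on master02 and master03 as well. Run the steps from the network plugin prerequisites above once on these nodes.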

Sync files

Copy the files from master01 to master02 and master03:

```shell
Master='k8s-master02 k8s-master03'
# Copy the master components
for NODE in $Master; do echo $NODE; scp /usr/bin/kube{let,ctl} $NODE:/usr/bin/; scp /opt/kubernetes/bin/* $NODE:/opt/kubernetes/bin/; done
# Copy the certificates
for NODE in $Master; do echo $NODE; scp /opt/kubernetes/ssl/* $NODE:/opt/kubernetes/ssl/; done
# Copy the configuration files
for NODE in $Master; do echo $NODE; scp /opt/kubernetes/cfg/* $NODE:/opt/kubernetes/cfg/; done
# Copy the kubectl config
for NODE in $Master; do echo $NODE; scp ~/.kube/config $NODE:/root/.kube/; done
# Copy runc
for NODE in $Master; do echo $NODE; scp /opt/runc.amd64 $NODE:/opt/; done
```

Delete kubelet.kubeconfig on master02 and master03

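```shell
rm /opt/kubernetes/cfg/kubelet.kubeconfig
```

Each kubelet obtains its own kubelet.kubeconfig through the bootstrap kubeconfig, so the copy from master01 must not be reused.

Copy the service files

```shell
for NODE in $Master; do echo $NODE; scp /usr/lib/systemd/system/kube* $NODE:/usr/lib/systemd/system/; done
```

Change the IP addresses in kube-apiserver.service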
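Instead of editing the file by hand on each node, the two values listed next (bind-address and advertise-address) can be rewritten with sed. This sketch assumes that kube-apiserver.service, which is not shown in this section, passes them as `--bind-address=<IP>` and `--advertise-address=<IP>`. `set_apiserver_ip` is a hypothetical helper and needs GNU sed for `-i -E`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set_apiserver_ip UNIT_FILE NODE_IP
# Rewrites the IPv4 values of --bind-address= and --advertise-address= in place.
# Assumes the flag=value form; requires GNU sed (-i without suffix, -E).
set_apiserver_ip() {
  local unit=$1 node_ip=$2
  sed -i -E "s#(--(bind|advertise)-address=)[0-9.]+#\1${node_ip}#g" "$unit"
}
```

On master02, for example, run `set_apiserver_ip /usr/lib/systemd/system/kube-apiserver.service 10.0.0.102`. Use 10.0.0.103 on master03, per the host table.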

bind-address = 当前节点IP

advertise-address = 当前节点IP设置开机启动

systemctl daemon-reload

systemctl start kube-apiserver.service

systemctl start kube-controller-manager.service

systemctl start kube-scheduler.service

systemctl start kube-proxy.service

systemctl start kubelet.service

systemctl enable kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl enable kube-proxy.service

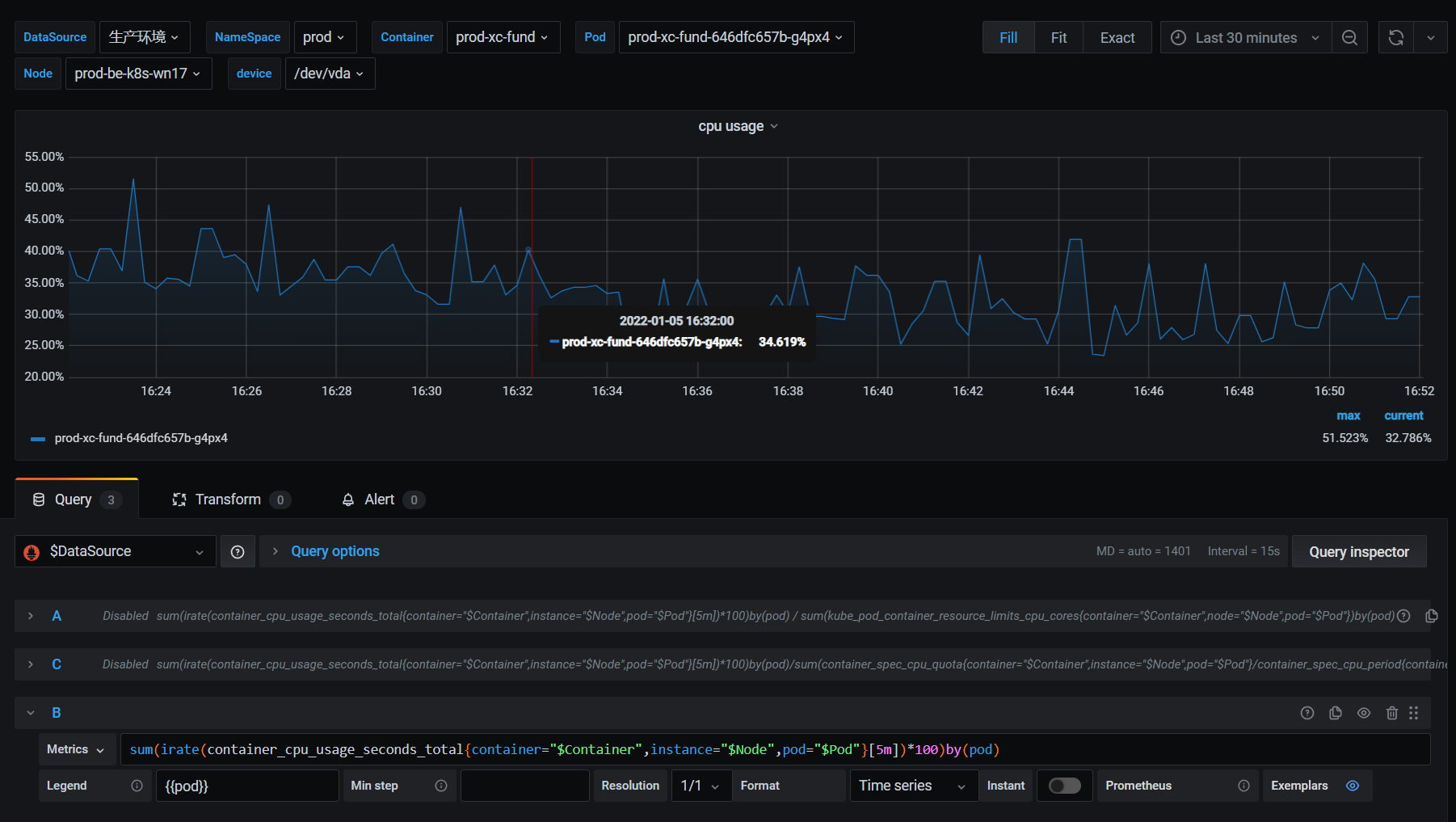

systemctl enable kubelet.service安装Metrics Server

In recent Kubernetes versions, resource metrics are collected by Metrics Server, which reports the CPU and memory usage of nodes and Pods (the data behind `kubectl top`).

https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Deploy

```shell
# Modify the configuration
vim components.yaml
```

```yaml
# 1: args of the metrics-server container
- args:
  - --cert-dir=/tmp
  - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
  - --kubelet-use-node-status-port
  - --metric-resolution=15s
  - --kubelet-insecure-tls
  - --requestheader-client-ca-file=/opt/kubernetes/ssl/front-proxy-ca.pem
  - --requestheader-username-headers=X-Remote-User
  - --requestheader-group-headers=X-Remote-Group
  - --requestheader-extra-headers-prefix=X-Remote-Extra-

# 2: volumeMounts of the same container
volumeMounts:
- mountPath: /tmp
  name: tmp-dir
- name: ca-ssl
  mountPath: /opt/kubernetes/ssl

# 3: volumes of the Pod
volumes:
- emptyDir: {}
  name: tmp-dir
- name: ca-ssl
  hostPath:
    path: /opt/kubernetes/ssl
```

```shell
# Switch image pulls to a mirror in China (optional)
sed -i "s#registry.k8s.io/#m.daocloud.io/registry.k8s.io/#g" components.yaml
# Deploy
kubectl apply -f components.yaml
```

Check the status

```
[root@master01 opt]# kubectl top node
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
master01 488m 24% 1157Mi 62%
master02 414m 20% 1122Mi 60%
master03 440m 22% 1084Mi 58%
```

Install helm

```shell
# Download locations
# https://github.com/helm/helm/releases
# https://get.helm.sh/helm-v3.14.4-linux-amd64.tar.gz
tar xvf helm-v3.14.4-linux-amd64.tar.gz
mv linux-amd64/helm /usr/bin/
chmod +x /usr/bin/helm
# Copy helm to the other masters ($Master as set in the file sync step)
for NODE in $Master; do echo $NODE; scp /usr/bin/helm $NODE:/usr/bin/; done
```

Deploy MetalLB

Before installing, make sure that kube-proxy's strictARP is set to true.
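The kube-proxy.yaml earlier in this section already sets `strictARP: true` under `ipvs:`. To confirm it on every node before installing MetalLB, a small awk scan can read the value from that nested block. This is a minimal sketch that assumes the indentation layout used in this guide; it is not a YAML parser. `strict_arp_enabled` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: strict_arp_enabled FILE
# Succeeds when the top-level ipvs: block of a KubeProxyConfiguration
# contains "strictARP: true". Indentation-based scan, not a YAML parser.
strict_arp_enabled() {
  awk '
    /^ipvs:/   { in_ipvs = 1; next }   # entering the ipvs block
    /^[^ \t]/  { in_ipvs = 0 }         # any other top-level key ends it
    in_ipvs && $1 == "strictARP:" { found = ($2 == "true"); exit }
    END { exit(found ? 0 : 1) }
  ' "$1"
}
```

Usage: `strict_arp_enabled /opt/kubernetes/cfg/kube-proxy.yaml && echo "strictARP ok"`.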

https://github.com/metallb/metallb/blob/v0.14.5/config/manifests/metallb-frr.yaml

```shell
# Save the manifest above as metallb.yaml
sed -i "s#quay.io/#m.daocloud.io/quay.io/#g" metallb.yaml
kubectl apply -f metallb.yaml
```

Check

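```
[root@master01 opt]# kubectl get pod -n metallb-system
NAME READY STATUS RESTARTS AGE
controller-7b6fdc45cb-cg5js 1/1 Running 1 (5m50s ago) 6m41s
speaker-5wjq4 4/4 Running 0 6m40s
speaker-llglk 4/4 Running 1 (57s ago) 6m40s
speaker-wwxg2 4/4 Running 1 (47s ago) 6m41s
```

Set the IP range for the local load balancer. The range must be in the same subnet as the nodes. It is best reserved for this purpose, so that no other host or DHCP server hands out those addresses.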

```shell
cat > metallb-config.yaml << EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: nat
  namespace: metallb-system
spec:
  addresses:
  - 10.0.0.210-10.0.0.250
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: empty
  namespace: metallb-system
EOF
kubectl apply -f metallb-config.yaml
```

The cluster can now assign IPs to LoadBalancer Services.
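The pool range can be sanity-checked offline. It has to lie inside the node subnet, and it must not include addresses already in use, such as the VIP 10.0.0.180 or the node IPs from the host table. The sketch below assumes the node network is 10.0.0.0/24, since the netmask is not stated in this guide. `check_pool` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helpers. ip2int converts A.B.C.D to a 32-bit integer.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# check_pool START-END SUBNET/LEN [RESERVED_IP...]
# Succeeds when START..END lies inside SUBNET/LEN and contains no RESERVED_IP.
check_pool() {
  local start=${1%-*} end=${1#*-} net=${2%/*} len=${2#*/}
  shift 2
  local mask netint s e ip r
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  netint=$(( $(ip2int "$net") & mask ))
  s=$(ip2int "$start")
  e=$(ip2int "$end")
  if (( s > e )); then
    echo "range start is after range end" >&2
    return 1
  fi
  if (( (s & mask) != netint || (e & mask) != netint )); then
    echo "range $start-$end is not inside $net/$len" >&2
    return 1
  fi
  for ip in "$@"; do
    r=$(ip2int "$ip")
    if (( r >= s && r <= e )); then
      echo "$ip is inside the pool" >&2
      return 1
    fi
  done
  return 0
}
```

For the pool used here, `check_pool 10.0.0.210-10.0.0.250 10.0.0.0/24 10.0.0.180 10.0.0.101 10.0.0.102 10.0.0.103 10.0.0.110 10.0.0.111 && echo ok` succeeds.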

Install ingress-nginx

https://github.com/kubernetes/ingress-nginx/blob/main/deploy/static/provider/cloud/deploy.yaml

```shell
# Save the manifest above as ingress-nginx.yaml
sed -i "s#registry.k8s.io/#m.daocloud.io/registry.k8s.io/#g" ingress-nginx.yaml
```

Create a default backend

```shell
cat > backend.yaml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app.kubernetes.io/name: default-http-backend
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: default-http-backend
  template:
    metadata:
      labels:
        app.kubernetes.io/name: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        image: guilin2014/ingress-nginx-error:v0.1
        livenessProbe:
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: kube-system
  labels:
    app.kubernetes.io/name: default-http-backend
spec:
  ports:
  - port: 80
    targetPort: 80
  selector:
    app.kubernetes.io/name: default-http-backend
EOF
kubectl apply -f ingress-nginx.yaml
kubectl apply -f backend.yaml
```

Add the following to the container args of the ingress-nginx controller Deployment, for example with `kubectl -n ingress-nginx edit deployment ingress-nginx-controller`. The value has the form `<namespace>/<service>`, and the default backend above is created in the kube-system namespace:

```yaml
- --default-backend-service=kube-system/default-http-backend
```

Check whether the Service has obtained an external IP

[root@master01 opt]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.108.242.243 10.0.0.210 80:31342/TCP,443:31119/TCP 36m

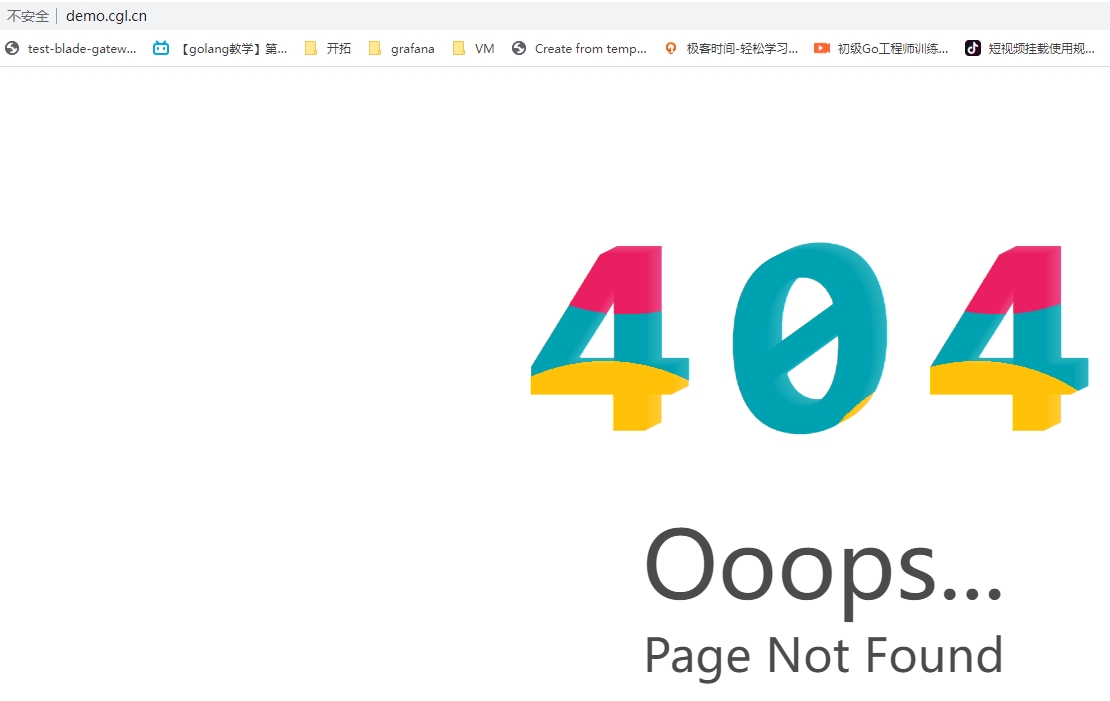

ingress-nginx-controller-admission ClusterIP 10.110.238.147 <none> 443/TCP此时随便解析一下IP到10.0.0.210,打开后会显示自定义页面

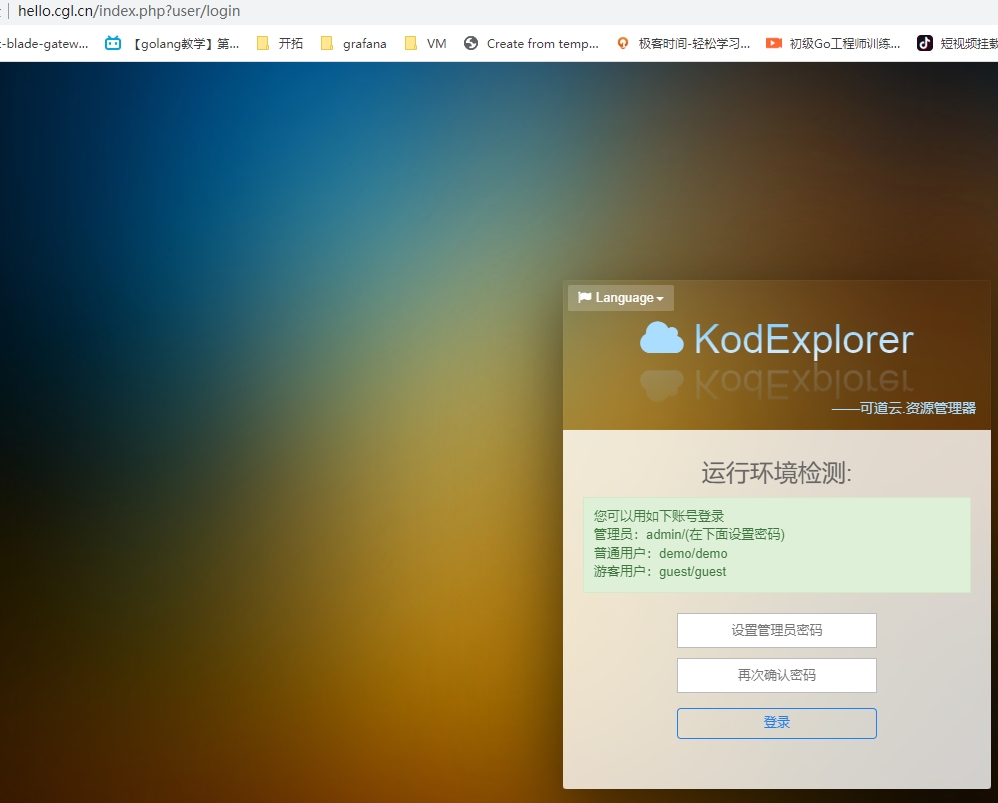

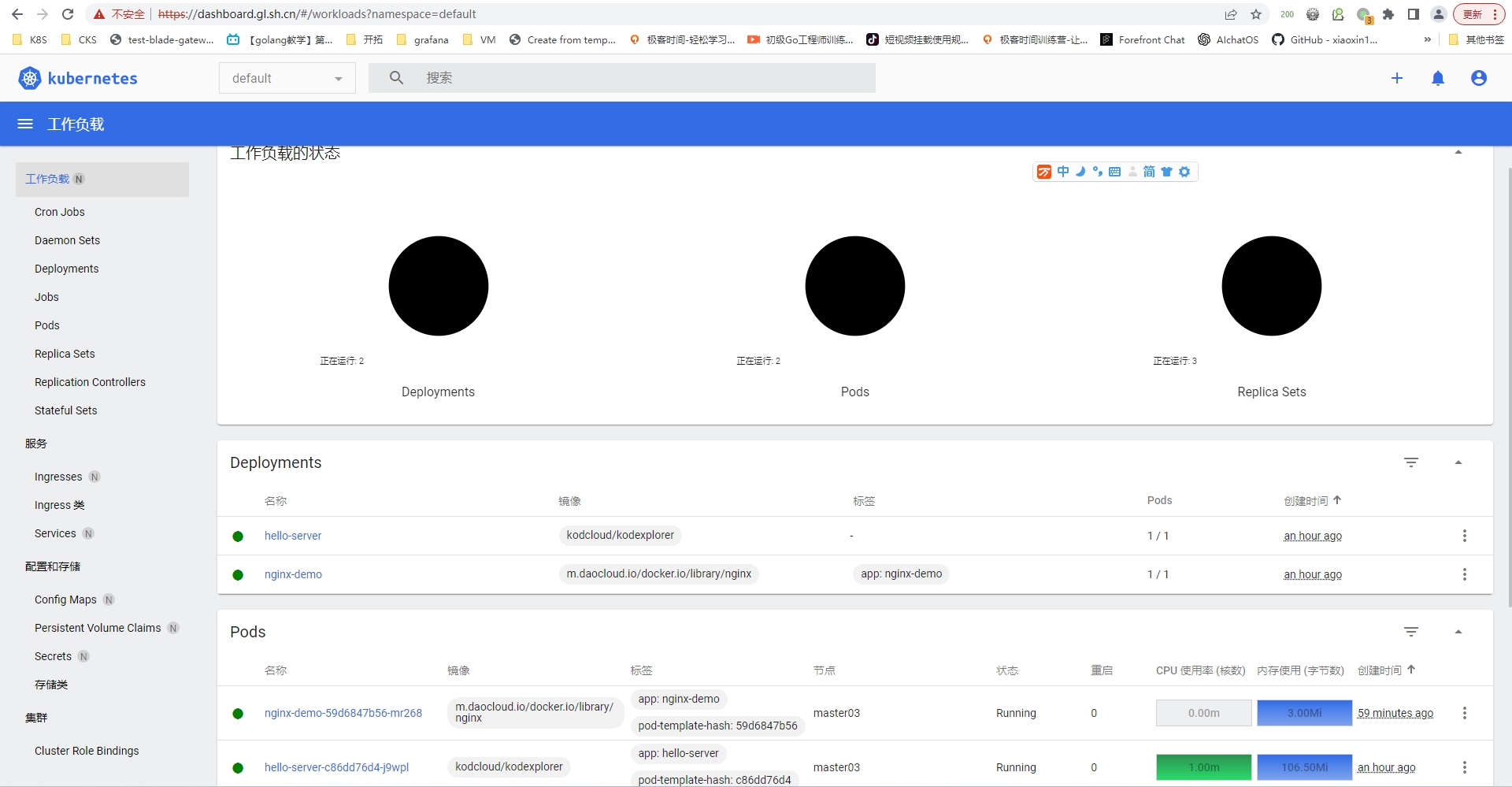

Test ingress-nginx

```shell
cat > ingress-demo-app.yaml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-server
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
      - name: hello-server
        image: kodcloud/kodexplorer
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
      - image: nginx
        name: nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  selector:
    app: nginx-demo
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello-server
  name: hello-server
spec:
  selector:
    app: hello-server
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
  - host: "hello.cgl.cn"
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: hello-server
            port:
              number: 8000
  - host: "demo.cgl.cn"
    http:
      paths:
      - pathType: Prefix
        path: "/nginx"
        backend:
          service:
            name: nginx-demo
            port:
              number: 8000
EOF
```

Apply

```shell
kubectl apply -f ingress-demo-app.yaml
```

Check the Ingress

```
[root@master01 opt]# kubectl get ingress
NAME CLASS HOSTS ADDRESS PORTS AGE
ingress-host-bar nginx hello.cgl.cn,demo.cgl.cn 10.0.0.210 80 27s
```

Resolve both domain names to 10.0.0.210 and you are done.
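For a quick test from a client machine without touching DNS, hosts-file entries can be derived from that output. Below is a minimal awk sketch that assumes the default column layout shown above (NAME CLASS HOSTS ADDRESS PORTS AGE). `ingress_hosts` is a hypothetical helper, and rows whose address has not been assigned yet are skipped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ingress_hosts
# Reads `kubectl get ingress` output on stdin and prints "ADDRESS HOST" lines
# for /etc/hosts. Assumes the default columns NAME CLASS HOSTS ADDRESS PORTS AGE;
# rows whose 4th column is not an IPv4 address (no address yet) are skipped.
ingress_hosts() {
  awk 'NR > 1 && $4 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+/ {
    split($4, addrs, ",")            # use the first address if several
    n = split($3, hosts, ",")        # HOSTS may list several names
    for (i = 1; i <= n; i++) print addrs[1], hosts[i]
  }'
}
```

For the Ingress above, `kubectl get ingress | ingress_hosts` prints `10.0.0.210 hello.cgl.cn` and `10.0.0.210 demo.cgl.cn`. Append these lines to /etc/hosts on the client.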

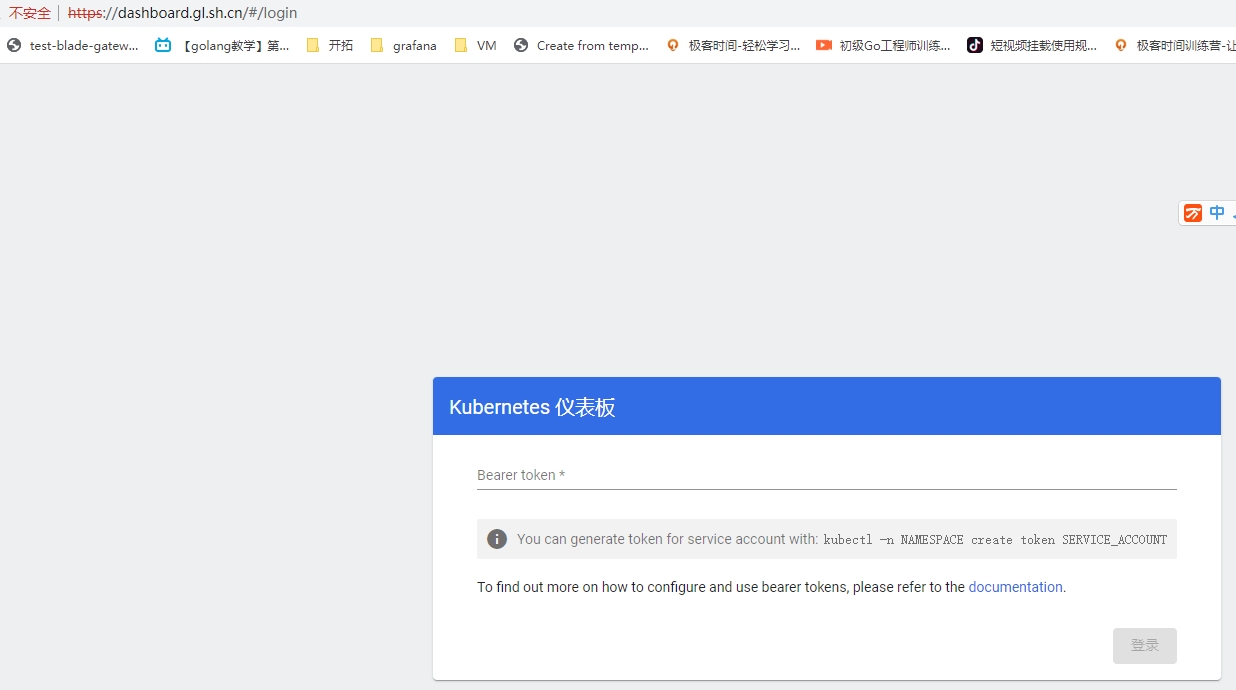

Install the dashboard

Running this on master01 is enough.

```shell
helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/
helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard
```

The above is the official default installation. For testing, you can use my variant below instead, which pulls the images through the m.daocloud.io mirror:

```shell
helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard \
  --set web.image.repository=m.daocloud.io/docker.io/kubernetesui/dashboard-web \
  --set api.image.repository=m.daocloud.io/docker.io/kubernetesui/dashboard-api \
  --set auth.image.repository=m.daocloud.io/docker.io/kubernetesui/dashboard-auth \
  --set metricsScraper.image.repository=m.daocloud.io/docker.io/kubernetesui/dashboard-metrics-scraper \
  --create-namespace --namespace kubernetes-dashboard
```

Generate a TLS certificate

```shell
openssl genpkey -algorithm RSA -out private.key
openssl req -new -key private.key -out csr.csr
```

Generating the CSR prompts for the country, region and similar fields; fill them in as asked.
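To avoid the interactive prompts, `openssl req` can take the subject on the command line with `-subj`. The sketch below runs this section's steps non-interactively into a directory of your choice. It also adds a `show_san` check that prints the certificate's Subject Alternative Name, so you can confirm that both host names made it in.

The subject fields and both helper names are assumptions. The config it writes mirrors the san.cnf shown next, plus a `[req]` section so that `openssl req` can read it via `-config`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make_dashboard_cert DIR
# Writes san.cnf, private.key, csr.csr and a self-signed certificate.crt
# (SANs: dashboard.gl.sh.cn, www.dashboard.gl.sh.cn) into DIR.
make_dashboard_cert() {
  local dir=$1
  mkdir -p "$dir" || return 1
  # Same sections as san.cnf, plus [req] so that `openssl req -config` works
  cat > "$dir/san.cnf" << 'EOF'
[req]
distinguished_name = req_distinguished_name

[req_distinguished_name]

[v3_req]
subjectAltName = @alt_names

[alt_names]
DNS.1 = dashboard.gl.sh.cn
DNS.2 = www.dashboard.gl.sh.cn
EOF
  openssl genpkey -algorithm RSA -out "$dir/private.key" || return 1
  # -subj answers the prompts up front; these subject values are examples
  openssl req -new -key "$dir/private.key" -out "$dir/csr.csr" \
    -config "$dir/san.cnf" -subj "/C=CN/ST=Shanghai/L=Shanghai/CN=dashboard.gl.sh.cn" || return 1
  openssl x509 -req -in "$dir/csr.csr" -signkey "$dir/private.key" \
    -out "$dir/certificate.crt" -extfile "$dir/san.cnf" -extensions v3_req -days 9999
}

# Hypothetical helper: show_san CERT prints the certificate's SAN extension.
show_san() {
  openssl x509 -in "$1" -noout -text | grep -A1 'Subject Alternative Name'
}
```

For example, `make_dashboard_cert ssl/pki/dashboard && show_san ssl/pki/dashboard/certificate.crt` should list both DNS names.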

The san.cnf file contains:

```ini
ions = v3_req
distinguished_name = req_distinguished_name

[req_distinguished_name]

[v3_req]
subjectAltName = @alt_names

[alt_names]
DNS.1 = dashboard.gl.sh.cn
DNS.2 = www.dashboard.gl.sh.cn
```

```shell
openssl x509 -req -in csr.csr -signkey private.key -out certificate.crt -extfile san.cnf -extensions v3_req -days 9999
```

Running it produces the following files:

```
[root@master01 opt]# ls ssl/pki/dashboard/
certificate.crt csr.csr private.key san.cnf
```

Create the Ingress

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-passthrough: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
  namespace: kubernetes-dashboard
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - dashboard.gl.sh.cn
    secretName: dashboard-secret
  rules:
  - host: "dashboard.gl.sh.cn"
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: kubernetes-dashboard-kong-proxy
            port:
              number: 443
```

The `tls` section refers to a Secret named dashboard-secret in the kubernetes-dashboard namespace. Assuming it is meant to hold the certificate generated above, it can be created with `kubectl -n kubernetes-dashboard create secret tls dashboard-secret --cert=certificate.crt --key=private.key`.

Check the port numbers

```
[root@master01 opt]# kubectl get svc -n kubernetes-dashboard
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes-dashboard-api ClusterIP 10.97.88.235 <none> 8000/TCP 27m
kubernetes-dashboard-auth ClusterIP 10.110.229.244 <none> 8000/TCP 27m
kubernetes-dashboard-kong-manager NodePort 10.103.200.37 <none> 8002:31269/TCP,8445:32767/TCP 27m
kubernetes-dashboard-kong-proxy ClusterIP 10.111.45.135 <none> 443/TCP 27m
kubernetes-dashboard-metrics-scraper ClusterIP 10.102.155.109 <none> 8000/TCP 27m
kubernetes-dashboard-web ClusterIP 10.102.134.43 <none> 8000/TCP 27m
```

Create a token

```shell
cat > dashboard-user.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF

kubectl apply -f dashboard-user.yaml

# Create a token; it is temporary and expires
kubectl -n kube-system create token admin-user
```

eyJhbGciOiJSUzI1NiIsImtpZCI6ImRpcWhSYjN1enV4Q3FsakZibDZ0cmhfc2lWLVotOWFZOG1oemxzUENvWmMifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzE0NjM3OTE0LCJpYXQiOjE3MTQ2MzQzMTQsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiMTI4ZWJiNDUtNzU2ZS00NGUzLWE0ZGQtMDFlYmEwMDljYjcxIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiMzdjZjM1YjItMTM3Ny00YmU4LTkzZjctMmQ5MjAwN2YzNTAyIn19LCJuYmYiOjE3MTQ2MzQzMTQsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbi11c2VyIn0.eK-p1ioL00tmIOToiJpcs9M7MiUzGfaIa6kZgGsxKreH75NpgmrTsFRAUslEm06rDwBupeLV0dyN4gttJxHsXYAZGMqdC6IcAshAzUP_-zfq6yM_pfXUeNM4zDD8hbbncYdSIjCFO6YxzzfFAUuP6cN8joJIsQ4qfGc3FpJ8viGbrED7QS9CgFZWlc8W9lJaYhyjCAXQg02YLuwVCJBbbB5-Ghc4G-8tcibmuShPr0CKhovOimJy-ep7yr49y3t2dXK3ydd2AxsC2Eds9_sJbWGp4dktcSNYgC7bUU8h6jar1CBCK7VYLf5sFxR_cYWDWo_8_Ue3gI2EShd3ZRojVA
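A token returned by `kubectl create token` is a JWT, so its lifetime can be read straight from the token. Decoding the sample above gives `iat` 1714634314 and `exp` 1714637914, so it is valid for 3600 seconds (one hour). A different lifetime can be requested with, for example, `kubectl -n kube-system create token admin-user --duration=24h`, subject to the API server's limits.

The bash sketch below decodes the payload using only coreutils. `jwt_payload` is a hypothetical helper, and it does not verify the signature.

```shell
#!/usr/bin/env bash
# Hypothetical helper: jwt_payload TOKEN
# Prints the decoded JSON payload (the second dot-separated segment) of a JWT.
# JWT segments are unpadded base64url, so map the URL-safe alphabet back to
# standard base64 and restore the "=" padding before decoding.
# The signature is NOT verified.
jwt_payload() {
  local seg
  seg=$(cut -d. -f2 <<< "$1" | tr '_-' '/+')
  case $(( ${#seg} % 4 )) in
    2) seg+="==" ;;
    3) seg+="=" ;;
  esac
  base64 -d <<< "$seg"
}
```

Usage: `jwt_payload "$(kubectl -n kube-system create token admin-user)"` prints the claims, including `exp` and `iat` as Unix timestamps.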

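Since 1.24, Kubernetes no longer creates a token Secret for a ServiceAccount automatically. We can still create such a Secret by hand and bind it to the ServiceAccount, and Kubernetes will fill in a token that never expires.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: admin-user
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: "admin-user"
type: kubernetes.io/service-account-token
```

The value of the `kubernetes.io/service-account.name` key names the ServiceAccount that the Secret is bound to. Once the Secret has been created, Kubernetes automatically populates it with the token, ca.crt and related data. The token generated this way never expires, and it can be used to access the Kubernetes API server.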

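That is about it for now. Next, I will write some tests to validate the cluster and keep updating this post with:

- Docker as the runtime
- IPv6 testing
- The Cilium network plugin

Stay tuned.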
